iAsk AI’s Historic Benchmark Triumph Sets a New Standard for AI

By Pau Castillo

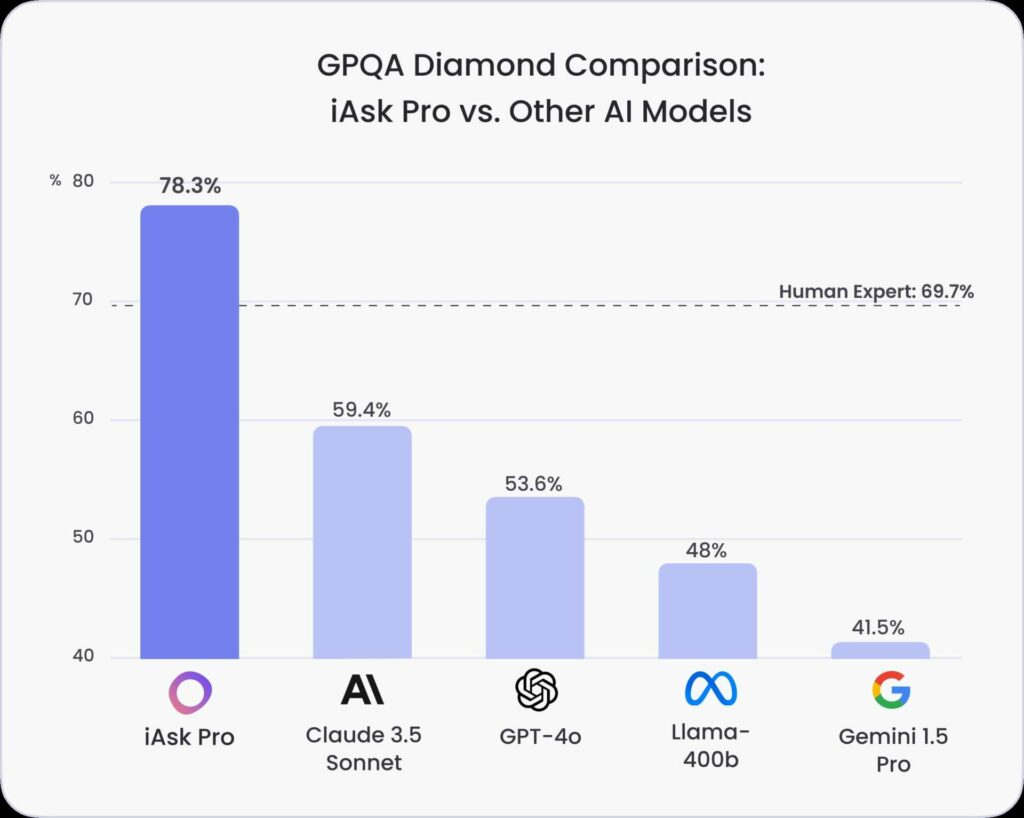

When it comes to breaking technological ground, few AI platforms have shaken the status quo like iAsk Pro. The latest model from iAsk AI has carved out a name for itself in everyday search and has also achieved the highest score on GPQA, the Graduate-Level Google-Proof Q&A Benchmark—an incredibly challenging test that measures AI competency in advanced scientific problem-solving. Surpassing major competitors like OpenAI’s top models and Anthropic’s Claude 3.5, iAsk Pro has set a precedent that could forever redefine the AI landscape.

What is GPQA and Why is iAsk Pro’s Achievement Groundbreaking?

GPQA is one of the most rigorous tests designed for AI models. Comprising complex questions in biology, physics, and chemistry, it’s a test of both knowledge and reasoning that pushes even PhD-level experts to their limits. Curated by specialists, GPQA evaluates the ability to answer questions that are resistant to simple web searches or superficial solutions, traits that make this benchmark a gold standard for assessing true AI intelligence.

GPQA’s dataset includes three variations: the extended set with 546 questions, the main set with 448, and the highly challenging Diamond subset of 198 questions. These Diamond questions are so difficult that human experts score only about 65% accuracy. Most leading AI models fare no better, struggling to surpass 50%.

In a stunning display, iAsk Pro achieved an accuracy of 78.28% on the Diamond benchmark using pass@1—a metric that measures the model’s ability to get the correct answer on the first attempt. This performance is unmatched by any AI model to date.

Outperforming the Competition with Precision and Efficiency

In a head-to-head comparison, iAsk Pro outpaced competitors in accuracy and efficiency. While OpenAI’s o1 model required 64 attempts per question (measured by cons@64, majority voting over 64 attempts) to achieve comparable accuracy, iAsk Pro reached its 78.28% pass@1 score with a single attempt and achieved 83.83% accuracy with cons@5, which measures majority-voting accuracy over five attempts. In practical terms, this means iAsk Pro provides the right answers faster and more reliably than any of its peers. This kind of efficiency is crucial for applications where speed and accuracy make all the difference, especially in complex fields such as healthcare, scientific research, and technical problem-solving.
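For readers who want to see how these two metrics differ, here is a minimal sketch in Python. It is an illustration under stated assumptions, not iAsk AI's or OpenAI's evaluation code: the function names `pass_at_1` and `cons_at_k`, the toy answer key, and the sampled answers are all hypothetical, and ties in the majority vote are broken by whichever answer was sampled first. The last lines check that the reported percentages are consistent with roughly 155 and 166 correct answers out of 198, counts the article itself does not state.

```python
from collections import Counter


def pass_at_1(first_answers, answer_key):
    """Fraction of questions whose first sampled answer is correct."""
    correct = sum(a == k for a, k in zip(first_answers, answer_key))
    return correct / len(answer_key)


def cons_at_k(samples_per_question, answer_key):
    """Fraction of questions where the majority vote over k samples is correct."""
    correct = 0
    for samples, key in zip(samples_per_question, answer_key):
        # most_common(1) returns the most frequent answer; ties go to the
        # answer that appeared first (an assumption of this sketch).
        majority, _ = Counter(samples).most_common(1)[0]
        correct += majority == key
    return correct / len(answer_key)


# Hypothetical toy data: 4 multiple-choice questions, 5 sampled answers each.
answer_key = ["A", "C", "B", "D"]
samples = [
    ["A", "A", "B", "A", "A"],
    ["B", "C", "C", "C", "D"],
    ["D", "D", "B", "D", "B"],
    ["D", "A", "D", "D", "C"],
]
first_answers = [s[0] for s in samples]

print(pass_at_1(first_answers, answer_key))  # 0.5
print(cons_at_k(samples, answer_key))        # 0.75

# Implied question counts on the 198-question Diamond subset.
DIAMOND_SIZE = 198
print(round(0.7828 * DIAMOND_SIZE))  # 155
print(round(0.8383 * DIAMOND_SIZE))  # 166
```

In the toy data, voting rescues the second question, where the first sample was wrong but the majority was right. That is why a cons@k score can exceed pass@1 for the same model.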

iAsk Pro leverages Chain of Thought (CoT) reasoning, a method that allows it to solve problems by breaking them into logical steps—a technique akin to how experts tackle intricate scientific challenges. This capability was pivotal in the model’s high scores on the GPQA Diamond benchmark, where nuanced understanding and layered reasoning are required for success.

Why GPQA is a Landmark in AI Testing

While many AI benchmarks measure knowledge recall or simple Q&A skills, GPQA raises the bar by focusing on graduate-level reasoning across specific scientific disciplines. Unlike general benchmarks such as MMLU and MMLU-Pro, which span a wide range of topics, GPQA’s narrow scope in advanced biology, physics, and chemistry demands genuine subject-matter expertise. Questions in GPQA are engineered not just to test facts but to probe deeper, requiring models to perform multi-step reasoning and demonstrate a nuanced understanding of scientific principles.

The GPQA benchmark also includes several layers of validation to ensure its rigor. Constructed by domain experts and further validated by a second round of non-expert testing, the GPQA questions are “Google-proof,” meaning even unrestricted web access won’t easily yield answers. In trials with non-experts, accuracy hovered around 34%, illustrating just how challenging this dataset is, even for those with research tools at their disposal.

A New Era in AI-Driven Knowledge and Discovery

Achieving the highest score on GPQA positions iAsk Pro as a frontrunner in AI development, with implications reaching far beyond search engines. As models like iAsk Pro become capable of handling graduate-level scientific reasoning, they offer transformative potential for sectors that rely on complex data analysis. Imagine a platform that doesn’t just “find answers” but deeply understands the questions posed—equipped with the capability to aid researchers, accelerate medical breakthroughs, or streamline educational tools.

For those who have grown accustomed to conventional search engines, iAsk’s advancements signal a compelling shift and a glimpse into the future of intelligent problem-solving. iAsk Pro’s success illustrates not just an evolution in AI but an entirely new way of interacting with information. Whether it’s cracking the toughest science problems or providing answers to real-world questions with precision, iAsk Pro has shown that the limits of AI are being redefined in real time.

Visit iAsk AI’s official page for an in-depth look at how the model stacks up against the competition and to see why iAsk Pro’s GPQA results represent a significant leap forward.

Rolling Stone UK newsroom and editorial staff were not involved in the creation of this featured content